Introduction to Correlation Coefficient

Finding the correlation coefficient in R is a common task in data analysis. The correlation coefficient is a statistical measure of the strength and direction of the relationship between two variables.

In data analysis, understanding this concept is essential for identifying patterns and making predictions. It quantifies how closely the two variables move in relation to each other, providing valuable insights into the data.

What Is a Coefficient?

In mathematics and statistics, a coefficient is a numerical or constant value that multiplies a variable or another term in an algebraic expression or equation. It represents the magnitude of that term’s contribution to the expression.

Coefficients can appear in various contexts and have different meanings depending on the field of study. In general terms, a coefficient provides information about the relationship or interaction between different variables or factors in a mathematical equation or formula.

For example, in a linear equation such as y = mx + b, where “m” represents the slope of the line and “b” represents the y-intercept, both “m” and “b” are coefficients. The coefficient “m” determines the rate of change of the dependent variable (y) with respect to the independent variable (x), while the coefficient “b” determines the y-coordinate of the point where the line intersects the y-axis.

In statistics, coefficients often appear in regression analysis, where they represent the estimated effects of independent variables on a dependent variable. For instance, in a simple linear regression model y = β0 + β1x + ε, the coefficients β0 and β1 represent the intercept and slope of the regression line, respectively. The slope β1 gives the direction and size of the expected change in the dependent variable (y) for a one-unit change in the independent variable (x), while β0 is the expected value of y when x is 0.

Coefficients can also appear in polynomial equations, Fourier series, and other mathematical expressions, where they play a crucial role in determining the overall behavior and properties of the equation. Depending on the context, coefficients may be real numbers, complex numbers, or even matrices in more advanced mathematical settings.

Understanding Correlation in Statistics

Correlation indicates the degree to which two or more variables change simultaneously. A positive correlation indicates that as one variable increases, the other variable also tends to increase, while a negative correlation means that as one variable increases, the other tends to decrease.

The correlation coefficient, symbolized by “r,” ranges from -1 to 1. A value of -1 signifies a perfect negative correlation, 1 represents a perfect positive correlation, and 0 indicates no linear correlation.

Methods of Correlation

Correlation is a fundamental concept in statistics and data analysis that measures the relationship between two variables. It helps researchers and analysts understand how changes in one variable relate to changes in another. There are several correlation methods, each with its own approach and applications.

The Pearson correlation coefficient

The Pearson correlation coefficient, often referred to as Pearson’s r, quantifies the linear association between two continuous variables. Its values range from -1 to +1: -1 denotes a perfect negative correlation, +1 signifies a perfect positive correlation, and 0 indicates no linear correlation.
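As a minimal sketch of what Pearson’s r measures, the snippet below computes it by hand as the covariance of two variables divided by the product of their standard deviations, then compares the result with R’s built-in cor(). The vectors hours and grades are made-up illustration data, not taken from the sample dataset used later in this article.

```r
# Hypothetical example data (not from the article's dataset)
hours  <- c(1, 2, 3, 4, 5, 6)
grades <- c(52, 58, 61, 70, 74, 79)

# Pearson's r: covariance divided by the product of the standard deviations
r_manual <- cov(hours, grades) / (sd(hours) * sd(grades))

# Built-in equivalent (Pearson is the default method of cor())
r_builtin <- cor(hours, grades, method = "pearson")

print(c(manual = r_manual, builtin = r_builtin))
```

Both values should agree, which shows that cor() is doing nothing more exotic than standardizing the covariance.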

Spearman’s Rank Correlation

Spearman’s rank correlation evaluates the monotonic connection between two variables, irrespective of whether it follows a linear pattern. Instead of using raw data, it ranks the variables and calculates the correlation based on the differences in ranks.
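The following sketch illustrates the ranking idea with made-up data: y grows with x along a curve rather than a straight line. Spearman’s coefficient is simply Pearson’s coefficient applied to the ranks, so a relationship that always moves in the same direction scores 1 even when it is not linear.

```r
# Hypothetical data: y always increases with x, but along a curve
x <- c(1, 2, 3, 4, 5, 6)
y <- x^3

pearson_r  <- cor(x, y, method = "pearson")   # below 1 because of the curvature
spearman_r <- cor(x, y, method = "spearman")  # based on ranks only

# Spearman's coefficient is Pearson's coefficient computed on the ranks
spearman_by_ranks <- cor(rank(x), rank(y))

print(c(pearson = pearson_r, spearman = spearman_r, by_ranks = spearman_by_ranks))
```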

Kendall’s Tau

Kendall’s tau is another non-parametric measure of association that evaluates the similarity in the ordering of data points between two variables. It is particularly useful when dealing with ordinal data or when the assumptions of Pearson correlation are violated.
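To make the “similarity in ordering” idea concrete, here is a small sketch on made-up data without ties. It counts concordant pairs (both variables order the two observations the same way) and discordant pairs (they disagree), and compares the resulting tau with cor(method = "kendall").

```r
# Hypothetical data without ties
x <- c(1, 2, 3, 4, 5)
y <- c(2, 1, 4, 3, 5)

# Every pair of observations, one pair per column
pairs <- combn(length(x), 2)

# +1 if x and y order the pair the same way (concordant), -1 if not (discordant)
signs <- sign(x[pairs[1, ]] - x[pairs[2, ]]) * sign(y[pairs[1, ]] - y[pairs[2, ]])
concordant <- sum(signs > 0)
discordant <- sum(signs < 0)

# Without ties: tau = (concordant - discordant) / number of pairs
tau_manual  <- (concordant - discordant) / ncol(pairs)
tau_builtin <- cor(x, y, method = "kendall")

print(c(manual = tau_manual, builtin = tau_builtin))
```

With tied values R applies a tie correction, so the simple formula above would no longer match the built-in result exactly.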

Understanding Correlation vs. Causation

It’s crucial to differentiate between correlation and causation. While correlation indicates a relationship between variables, it does not imply causation. Correlation simply suggests that changes in one variable are associated with changes in another, but it does not prove that one variable causes the other to change.

Methods for Assessing Correlation Significance

Determining whether a correlation coefficient is statistically significant involves hypothesis testing, confidence intervals, and p-values. These methods help researchers judge whether the observed correlation could plausibly have arisen by chance.
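A short sketch of how these pieces fit together in R, using simulated data (the seed, sample size, and slope are arbitrary assumptions). For Pearson’s method, cor.test() is based on the statistic t = r·sqrt(n − 2) / sqrt(1 − r²) with n − 2 degrees of freedom, which the code reproduces by hand.

```r
# Simulated data for illustration only
set.seed(42)
n <- 30
x <- rnorm(n)
y <- 0.6 * x + rnorm(n)

result <- cor.test(x, y, method = "pearson", conf.level = 0.95)

# Reproduce the test statistic and two-sided p-value by hand
r        <- unname(result$estimate)
t_manual <- r * sqrt(n - 2) / sqrt(1 - r^2)
p_manual <- 2 * pt(-abs(t_manual), df = n - 2)

print(result$p.value)   # p-value reported by cor.test()
print(result$conf.int)  # 95% confidence interval for r
```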

Non-parametric Correlation Methods

Non-parametric correlation methods, such as Spearman’s rank correlation and Kendall’s tau, are valuable when data does not meet the assumptions of parametric tests. They provide robust measures of association without requiring the data to follow a specific distribution.

Correlation in Real-world Applications

Correlation finds applications in various fields, including business, medicine, and social sciences. It helps economists predict market trends, doctors assess the effectiveness of treatments, and psychologists understand human behavior.

Challenges and Considerations

Despite its usefulness, correlation analysis faces challenges such as dealing with outliers, interpreting results in complex datasets, and addressing sample size limitations. Researchers must be aware of these challenges and apply appropriate techniques to mitigate them.

Correlation and Machine Learning

In machine learning, correlation plays a vital role in feature selection, preprocessing, and algorithm design. Understanding the correlation between features helps improve model performance and interpretability.
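One common use is spotting redundant features before modelling. The sketch below uses R’s built-in mtcars dataset and flags feature pairs whose absolute correlation exceeds 0.8. Both the choice of columns and the 0.8 cutoff are arbitrary assumptions for illustration, not a recommended rule.

```r
# A few numeric columns from the built-in mtcars dataset
features   <- mtcars[, c("disp", "hp", "wt", "qsec", "drat")]
cor_matrix <- cor(features)

# Look only at the upper triangle so each pair is reported once
threshold <- 0.8  # hypothetical cutoff
high <- which(abs(cor_matrix) > threshold & upper.tri(cor_matrix), arr.ind = TRUE)

redundant_pairs <- data.frame(
  feature_a = rownames(cor_matrix)[high[, "row"]],
  feature_b = colnames(cor_matrix)[high[, "col"]],
  r         = cor_matrix[high]
)

print(redundant_pairs)
```

Pairs flagged this way are candidates for dropping or combining, since they carry largely overlapping information.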

Correlation in Time Series Analysis

In time series analysis, correlation helps identify patterns and dependencies over time. Autocorrelation measures the correlation between a variable and its lagged values, while seasonality and trend analysis uncover recurring patterns and long-term trends.
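As a rough sketch of autocorrelation in R, the code below simulates a series in which each value depends on the previous one (an AR(1) process with an assumed coefficient of 0.7) and estimates its lag-1 autocorrelation in two ways: with acf(), and by correlating the series with a copy of itself shifted by one step.

```r
# Simulated AR(1) series; the coefficient 0.7 is an arbitrary assumption
set.seed(1)
n <- 200
series <- as.numeric(arima.sim(model = list(ar = 0.7), n = n))

# acf() returns autocorrelations for lags 0, 1, 2, ...; element 1 is lag 0
acf_values <- acf(series, lag.max = 5, plot = FALSE)
lag1_acf   <- acf_values$acf[2]

# Correlation between the series and itself shifted by one time step
lag1_cor <- cor(series[-n], series[-1])

print(c(acf = lag1_acf, shifted_cor = lag1_cor))
```

The two estimates use slightly different normalizations, so they should be close but not identical.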

Ethics and Bias in Correlation Studies

Ethical considerations are essential in correlation studies to ensure fair and unbiased results. Researchers must address potential biases in data collection and interpretation, as well as consider the ethical implications of their findings.

Future Trends and Innovations

Advancements in correlation analysis methods, combined with the proliferation of big data and AI, open up new possibilities for innovation. Emerging trends include the integration of correlation with machine learning algorithms and its application in diverse domains.

Understanding Different Types of Correlation and Their Examples

When delving into statistical analysis, one often encounters correlation coefficients, which measure the strength and direction of the relationship between two variables. Interpreting these correlation coefficients is crucial for drawing meaningful insights from data. Here, we’ll explore the types of correlation and provide real-world examples to elucidate each type.

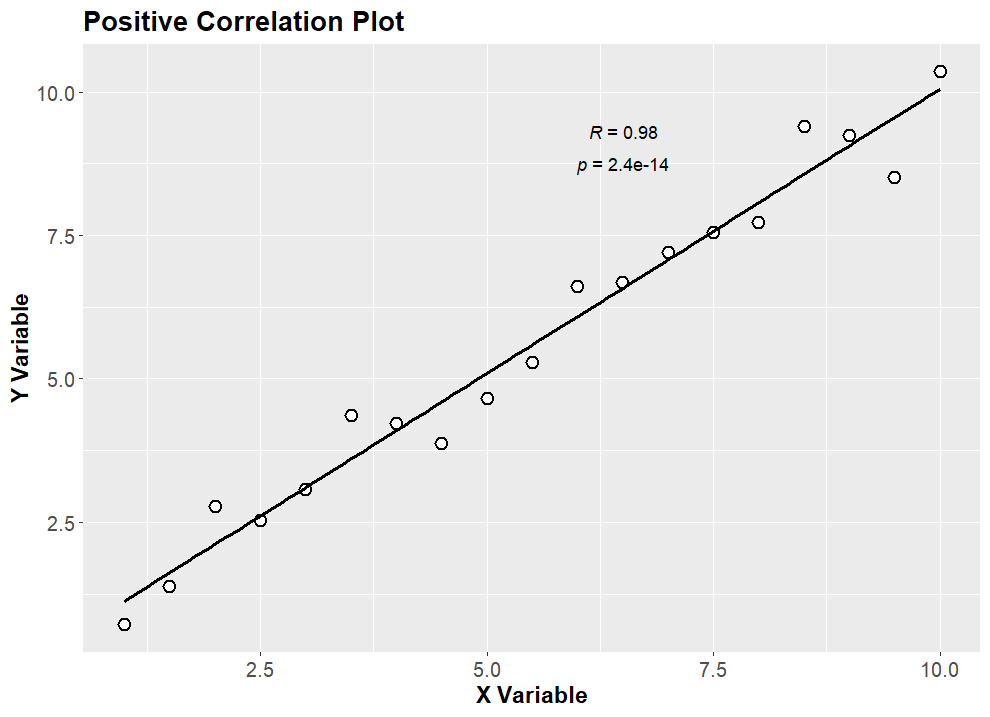

1. Positive Correlation

A positive correlation suggests that when one variable rises, the other variable typically follows suit and increases as well. This implies that both variables shift in tandem.

1.1 Example:

More hours spent studying are usually associated with higher grades. As study time increases, grades typically improve. For instance, if a student studies for 4 hours a day, they may achieve a GPA of 3.5. If they increase their study time to 6 hours a day, their GPA may rise to 3.8. This demonstrates a positive correlation between study time and academic performance.

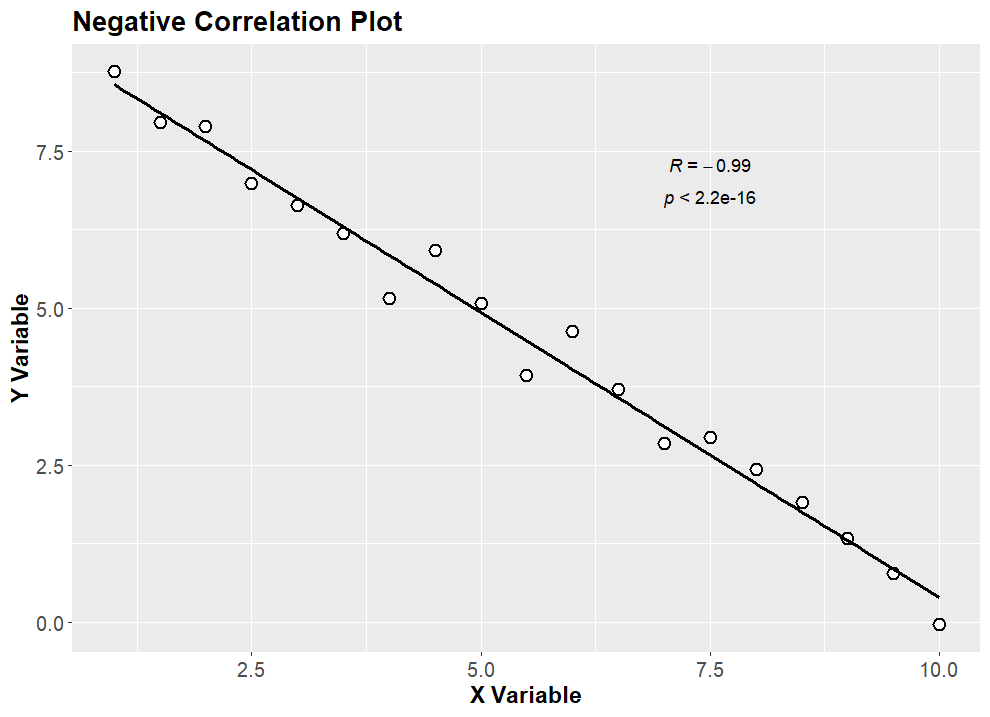

2. Negative Correlation

A negative correlation implies that when one variable increases, the other variable tends to decrease. In essence, the variables move in opposite directions.

2.1 Example:

The more frequently individuals exercise, the lower their body weight tends to be. In this case, as exercise frequency rises, body weight decreases, indicating a negative correlation between exercise frequency and body weight. For example, if a person exercises three times a week, they might weigh 160 pounds. However, if they increase their exercise frequency to five times a week, their weight might decrease to 150 pounds.

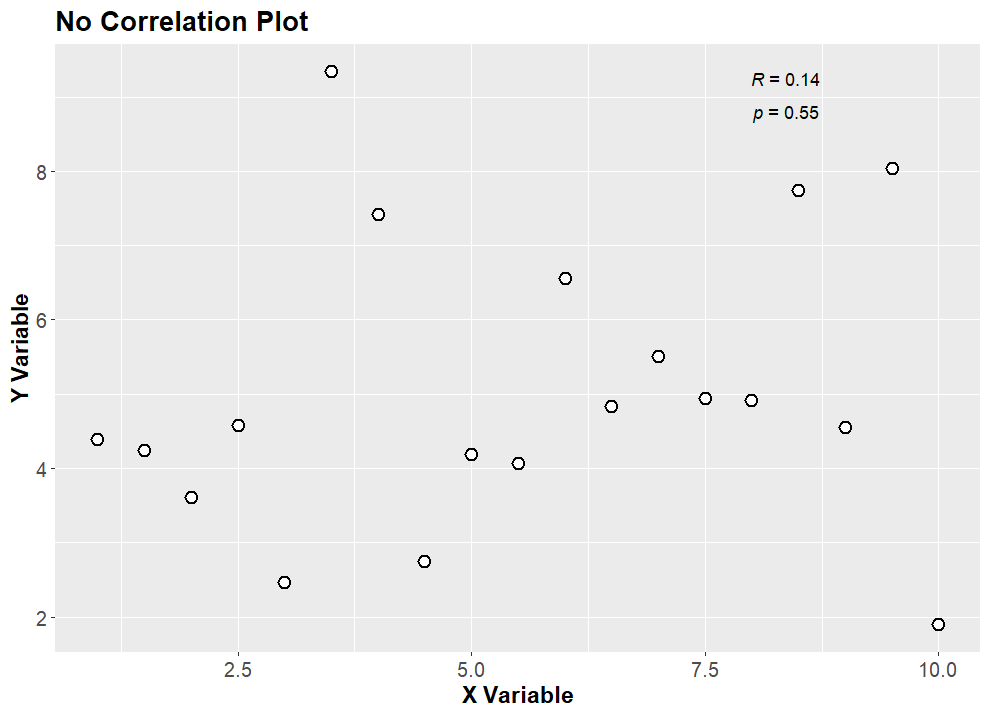

3. No Correlation

When there’s no correlation, there’s no discernible connection between the variables. Changes in one variable are not associated with changes in the other, and no consistent pattern is observed.

3.1 Example:

There’s no correlation between the number of clouds in the sky and the price of stocks. The fluctuations in cloud cover don’t impact stock prices, demonstrating a lack of correlation between these two variables. For instance, on days with heavy cloud cover, the stock prices may remain stable, while on clear days, the stock prices may fluctuate unpredictably.

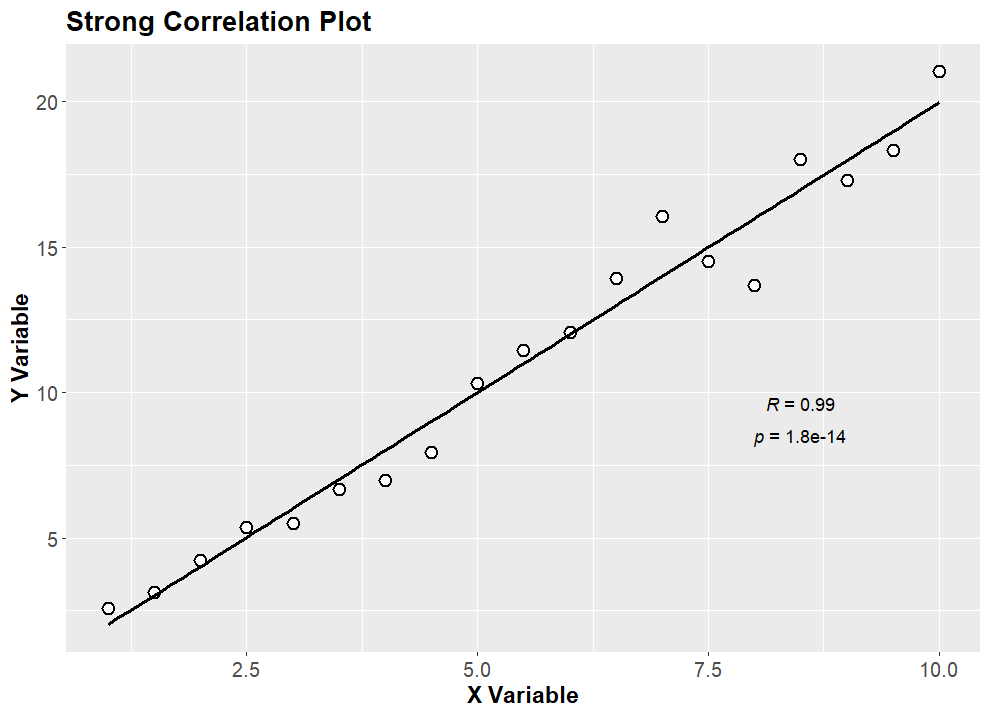

4. Strong Correlation

Strong correlation signifies a robust relationship between variables, where changes in one variable are highly indicative of changes in the other. This indicates a clear pattern between the variables.

4.1 Example:

There’s a strong positive correlation between temperature and ice cream sales. As temperatures rise, the sales of ice cream increase significantly. For example, when the temperature reaches 90°F, ice cream sales may surge to 500 units per day, whereas at 70°F, sales might only reach 200 units per day. This demonstrates a strong correlation between temperature and ice cream sales, where higher temperatures lead to higher sales.

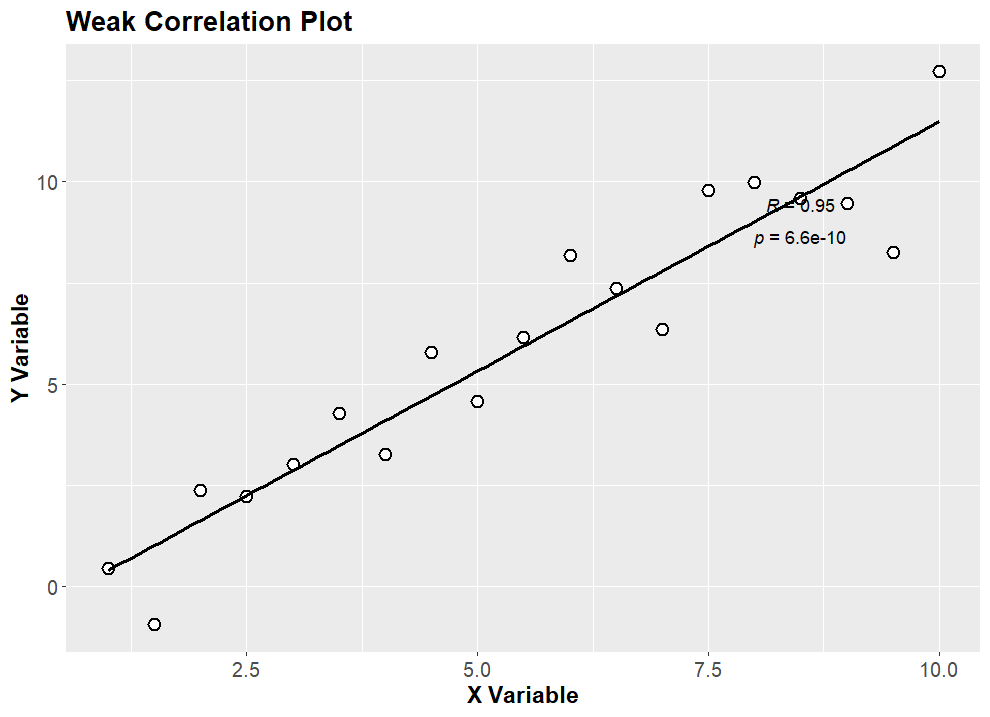

5. Weak Correlation

Weak correlation indicates a less pronounced relationship between variables, where changes in one variable may not consistently predict changes in the other. The relationship is not as clear or reliable compared to a strong correlation.

5.1 Example:

The correlation between shoe size and intelligence is weak. While there might be some correlation, it’s not substantial enough to make accurate predictions about intelligence based solely on shoe size. For example, individuals with larger shoe sizes may not necessarily have higher IQ scores. This indicates a weak correlation between these two variables.

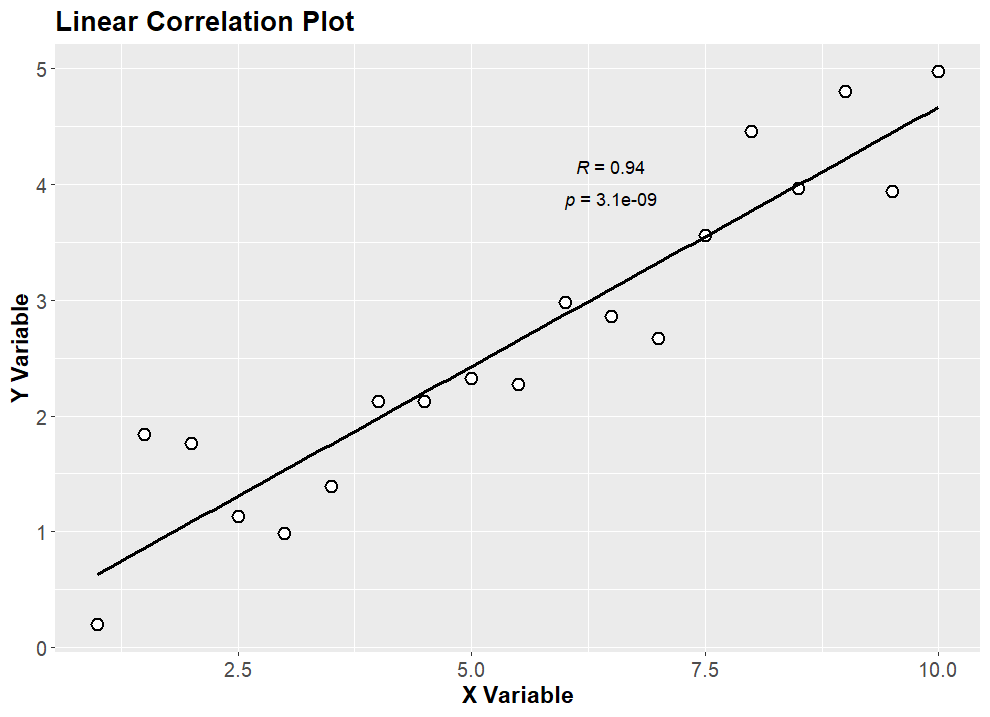

6. Linear Correlation

Linear correlation suggests that the relationship between variables can be represented by a straight line. This means that as one variable changes, the other variable changes at a constant rate.

6.1 Example:

The relationship between the number of years of experience and salary in a job may exhibit a linear correlation. Generally, as experience increases, salary tends to rise proportionally. For instance, a person with 5 years of experience may earn $50,000 per year, while someone with 10 years of experience may earn $100,000 per year. This demonstrates a linear correlation between experience and salary.

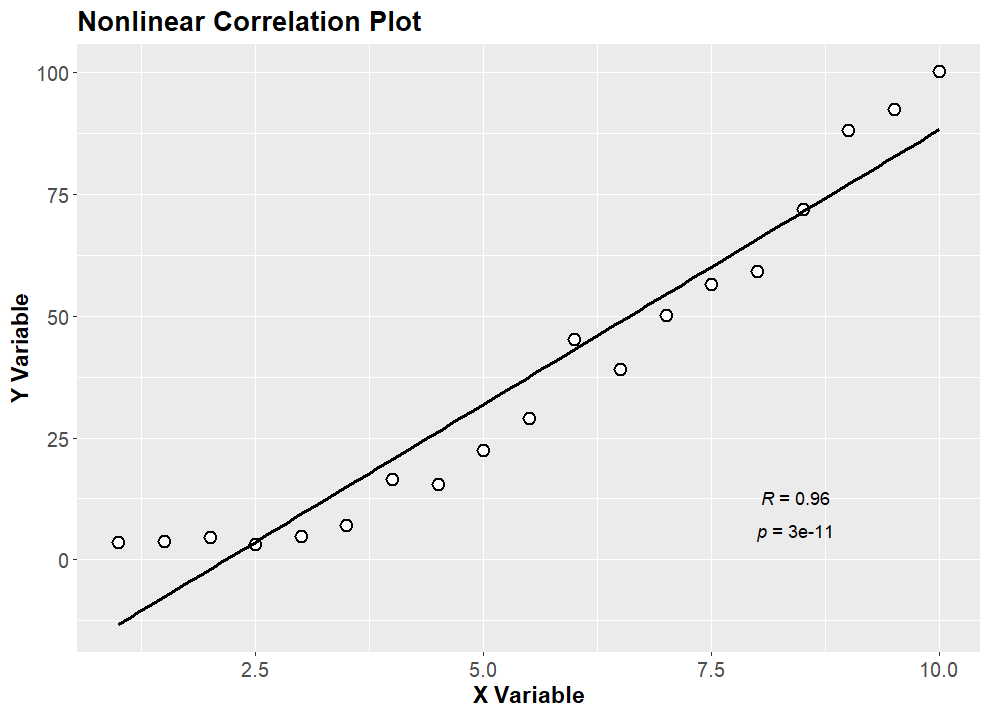

7. Nonlinear Correlation

Nonlinear correlation indicates a relationship between variables that doesn’t follow a straight line but rather a curve. This means that the relationship between the variables is more complex and cannot be represented by a straight line.

7.1 Example:

The relationship between the quantity of fertilizer applied and crop yield could be nonlinear. Initially, increasing fertilizer may lead to significant yield increases, but beyond a certain point, additional fertilizer might not produce the same yield gains. For example, applying the first 100 kg of fertilizer may increase crop yield by 20%, but applying a further 100 kg (200 kg in total) may add only another 10%. This demonstrates a nonlinear correlation between fertilizer use and crop yield.
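The sketch below mimics this diminishing-returns pattern with a made-up response curve (the formula and numbers are assumptions, not agronomic data) and adds a U-shaped example. It shows why Pearson’s r, which only measures linear association, can understate or completely miss a curved relationship.

```r
# Hypothetical diminishing-returns curve; the numbers are made up for illustration
fertilizer <- seq(0, 300, by = 50)               # kg applied
yield_gain <- 30 * (1 - exp(-fertilizer / 100))  # % yield increase, levelling off

# Each additional 50 kg adds less than the previous 50 kg
marginal_gain <- diff(yield_gain)

# Pearson's r stays below 1 because the points bend away from a straight line
pearson_r <- cor(fertilizer, yield_gain)

# A U-shaped relationship: y is fully determined by x, yet Pearson's r is 0
x <- -5:5
u_shape_r <- cor(x, x^2)

print(round(marginal_gain, 2))
print(round(c(pearson = pearson_r, u_shape = u_shape_r), 3))
```

Plotting the data before computing a coefficient is the simplest way to catch cases like these.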

Introduction to R Programming Language

R is a programming language and environment built for statistical analysis and visualization. It is widely used for data analysis and statistical modeling because of its extensive range of built-in functions and packages, which is why calculating correlation coefficients in R is such a common task. RStudio, a separate development environment for R, provides a user-friendly interface that makes the language accessible to beginners and advanced users alike.

Methods for Finding the Correlation Coefficient in R

R offers several ways to calculate the correlation coefficient:

1. Using cor() Function: The cor() function is a simple and efficient way to calculate the correlation coefficient. Given two vectors, it returns a single coefficient; given a matrix or data frame, it returns the correlation matrix of pairwise coefficients between the columns.

2. Using cor.test() Function: The cor.test() function performs a hypothesis test on the correlation between two variables. It reports the correlation coefficient together with its p-value and, for Pearson’s method, a confidence interval, allowing users to assess the significance of the correlation.

3. Using Pearson’s Correlation Coefficient: The Pearson correlation coefficient measures the linear relationship between two variables. It is the default method of cor() and cor.test() and is typically used for normally distributed data with a linear relationship.

4. Using Spearman’s Correlation Coefficient: Spearman correlation coefficient assesses the monotonic relationship between two variables, which may not necessarily be linear. It is more robust to outliers and non-linear relationships compared to Pearson’s correlation coefficient.
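The four options above can be sketched in a few lines. The example uses R’s built-in mtcars dataset purely for illustration; the choice of the mpg, wt, and hp columns is an assumption and has nothing to do with the sample dataset used later.

```r
# Three numeric columns from the built-in mtcars dataset
vars <- mtcars[, c("mpg", "wt", "hp")]

# cor() on a data frame returns the full correlation matrix
pearson_matrix  <- cor(vars)                       # Pearson is the default
spearman_matrix <- cor(vars, method = "spearman")  # rank-based alternative

# cor() on two vectors returns a single coefficient
r_mpg_wt <- cor(mtcars$mpg, mtcars$wt)

# cor.test() adds a p-value and confidence interval for one pair of variables
test_result <- cor.test(mtcars$mpg, mtcars$wt, method = "pearson")

print(round(pearson_matrix, 2))
print(round(spearman_matrix, 2))
print(test_result)
```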

Step-by-Step Guide to Finding the Correlation Coefficient in R

To find the correlation coefficient in R, follow these steps:

1. Installing R and RStudio: Download and install R from the Comprehensive R Archive Network (CRAN) website, and install RStudio for a user-friendly interface.

2. Importing Datasets: Load the datasets into R using functions like read.csv() or read.table(). Ensure that the datasets are properly formatted and contain the variables of interest.

3. Calculating Correlation Coefficient: Use the appropriate method (cor(), cor.test(), etc.) to calculate the correlation coefficient between the desired variables. Specify any additional parameters such as method (Pearson, Spearman, etc.) as needed.

4. Interpreting the Results: Analyze the correlation coefficient value and its significance to draw conclusions about the relationship between variables. Careful interpretation considers the magnitude of the coefficient, its direction (positive or negative), and the associated p-value.
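Steps 2 to 4 can be sketched end to end as follows. So that the example runs on its own, it first writes simulated data to a temporary CSV file; the simulated values and the file path are assumptions made for illustration, and in practice the path would point to your own file.

```r
# Create a stand-in CSV so the example is self-contained (simulated data)
set.seed(123)
demo <- data.frame(sample_1 = rnorm(49, mean = 50, sd = 10))
demo$sample_2 <- 0.8 * demo$sample_1 + rnorm(49, sd = 5)
csv_path <- tempfile(fileext = ".csv")
write.csv(demo, csv_path, row.names = FALSE)

# Step 2: import the dataset and check its structure
correlation_data <- read.csv(csv_path)
str(correlation_data)

# Step 3: calculate the coefficient and test its significance
r    <- cor(correlation_data$sample_1, correlation_data$sample_2)
test <- cor.test(correlation_data$sample_1, correlation_data$sample_2)

# Step 4: report magnitude, direction, and p-value together
direction <- if (r > 0) "positive" else "negative"
cat(sprintf("r = %.3f (%s), p-value = %.3g\n", r, direction, test$p.value))
```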

Practical Examples in “R”

Download the sample dataset for correlation analysis

Correlation Analysis “R” code

```r
# How to find the correlation coefficient in R

# Load necessary libraries
library(readr)   # for reading CSV files
library(ggplot2) # for plotting (provides theme_classic())
library(AgroR)   # provides plot_cor()

# Read the data from the CSV file
correlation_data <- read_csv("correlation_data.csv")

# Extract the two variables
x <- correlation_data$sample_1
y <- correlation_data$sample_2

# Perform the correlation test (Pearson by default)
cor.test(x, y)
```

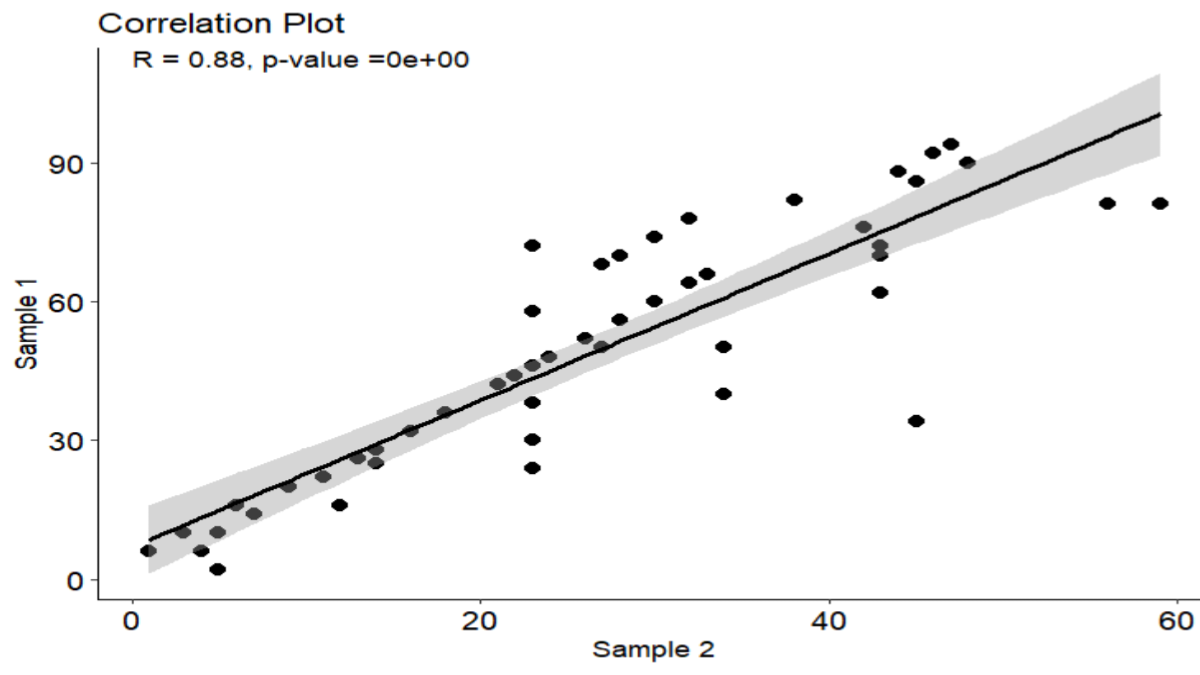

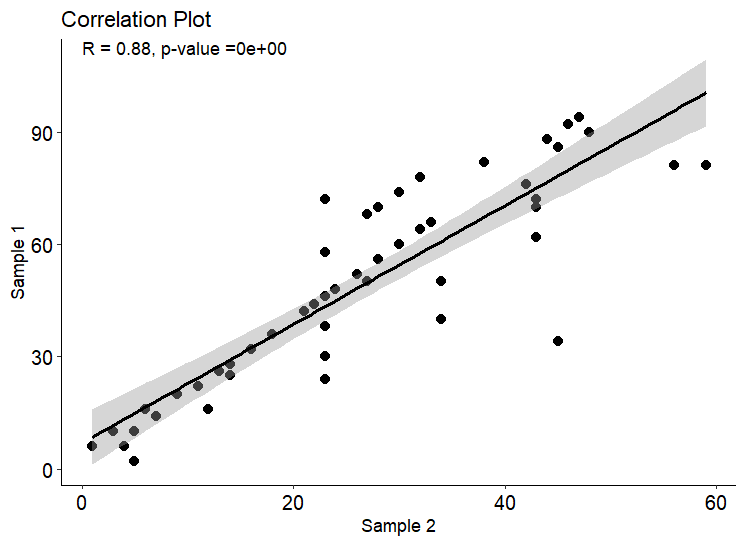

Plot correlation “R” code

```r
# Plot correlation (uses x, y, and the libraries loaded above)
plot_cor(x, y,
         method = "pearson",
         xlab = "Sample 1",       # x holds sample_1
         ylab = "Sample 2",       # y holds sample_2
         title = "Correlation Plot",
         theme = theme_classic(),
         pointsize = 4,           # point size
         shape = 20,              # solid circle
         color = "black",         # point color
         ic = TRUE)
```

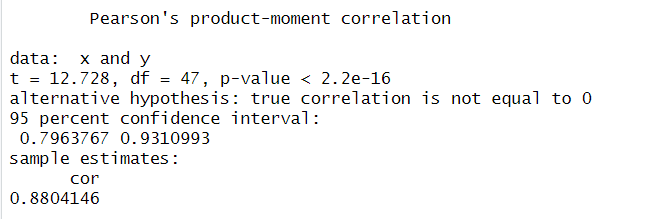

Result

The analysis used Pearson’s product-moment correlation to examine the relationship between variables x and y and found a strong positive correlation, r = 0.880. The test statistic is t = 12.728 on 47 degrees of freedom (df), which corresponds to 49 paired observations. The p-value (< 2.2e-16) is far below conventional significance levels, so the null hypothesis of zero correlation is rejected: a correlation this large is very unlikely to arise by chance alone. The 95% confidence interval, from 0.796 to 0.931, lies well above zero, so the estimate is reasonably precise. In summary, these results show a strong positive linear association between x and y, meaning that higher values of one tend to go with higher values of the other.
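The reported t-value and confidence interval can be cross-checked from r and the degrees of freedom alone. The sketch below recomputes them from the rounded values quoted above, using the t formula for Pearson’s r and the Fisher z-transformation that cor.test() uses for its interval. Because r is rounded to three decimals, the results should land close to, but not exactly on, the reported figures.

```r
# Values as reported in the output above (r is rounded to three decimals)
r  <- 0.880
df <- 47
n  <- df + 2  # number of paired observations

# Test statistic: t = r * sqrt(df) / sqrt(1 - r^2)
t_value <- r * sqrt(df) / sqrt(1 - r^2)
p_value <- 2 * pt(-abs(t_value), df = df)

# 95% confidence interval via the Fisher z-transformation
z  <- atanh(r)
se <- 1 / sqrt(n - 3)
ci <- tanh(z + c(-1, 1) * qnorm(0.975) * se)

print(round(t_value, 2))
print(signif(p_value, 3))
print(round(ci, 3))
```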

Advantages of Using R for Calculating Correlation Coefficient

R provides a wide range of built-in functions and packages specifically designed for statistical analysis, making it a versatile tool for data scientists and researchers.

It offers comprehensive visualization capabilities through packages like ggplot2, allowing users to create informative plots and graphs to illustrate correlation results. R is open-source and has a vast community of users and developers, ensuring continuous improvement and support through online forums, tutorials, and documentation.

Limitations and Considerations

While R is powerful, it may have a steep learning curve for beginners, particularly those without prior programming experience. However, there are plenty of resources available, such as tutorials, books, and online courses, to support users in learning R.

Interpretation of correlation results requires caution, as correlation does not imply causation. It is essential to consider potential confounding variables and verify assumptions such as linearity and homoscedasticity before drawing conclusions from correlation analyses.

Large datasets may require efficient coding practices and memory management to avoid performance issues in R. Users should familiarize themselves with optimization techniques and data manipulation functions to handle big data effectively.

Conclusion

To summarize, mastering the technique of calculating correlation coefficients in R is imperative for both data analysts and researchers alike. By utilizing the various methods and functions available in R, users can efficiently analyze relationships between variables and gain valuable insights into their data.

Whether you are a beginner or an experienced data scientist, mastering correlation analysis in R can enhance your ability to extract meaningful information from datasets and make informed decisions based on data-driven insights. Understanding the various types of correlation and their implications is crucial for accurate data analysis and interpretation.

Whether it’s positive, negative, strong, or weak correlation, recognizing these patterns enables researchers and analysts to draw meaningful conclusions from their data. By leveraging various correlation methods and addressing associated challenges, researchers can uncover valuable information that informs decision-making and drives innovation across disciplines.

FAQs

1. How do I interpret a correlation coefficient? A correlation coefficient close to 1 or -1 indicates a strong relationship between variables, while a coefficient close to 0 suggests a weak or no linear relationship. The sign gives the direction of the relationship.

2. Can I use R for other types of statistical analysis? Yes, R offers a wide range of statistical techniques beyond calculating correlation coefficients, including regression analysis, hypothesis testing, and data visualization.

3. What precautions should I take when interpreting correlation results? Avoid inferring causation from correlation, consider potential confounding variables, and verify assumptions such as linearity and homoscedasticity.

4. Is R suitable for beginners in statistical analysis? While R may have a learning curve, there are plenty of resources available, such as tutorials, books, and online communities, to support beginners in mastering statistical analysis with R.

5. What does it mean if there’s no correlation between variables? If there’s no correlation, changes in one variable do not predict or influence changes in the other variable.

6. Can correlation imply causation? No, correlation does not imply causation. Even if two variables are correlated, it doesn’t necessarily mean that changes in one variable cause changes in the other.

7. How can correlation analysis be useful in real-life scenarios? Correlation analysis helps in understanding the relationships between various factors, which can be valuable in fields such as economics, psychology, and healthcare for making informed decisions.

8. Can correlation prove causation? No, correlation measures the relationship between variables but does not establish causation. Additional evidence and experiments are needed to determine causality.

9. What is the difference between Pearson correlation and Spearman correlation? Pearson correlation assesses linear relationships between continuous variables, while Spearman correlation evaluates monotonic relationships based on ranked data.

10. When should I use non-parametric correlation methods? Non-parametric methods like Spearman’s rank correlation and Kendall’s tau are suitable when data does not meet the assumptions of parametric tests or when dealing with ordinal data.
